Projects

A Medical Research Methodology Querying System based on Llama-3.2 trained with LoRA

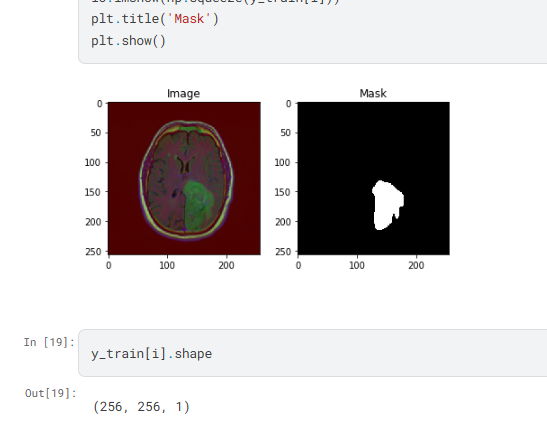

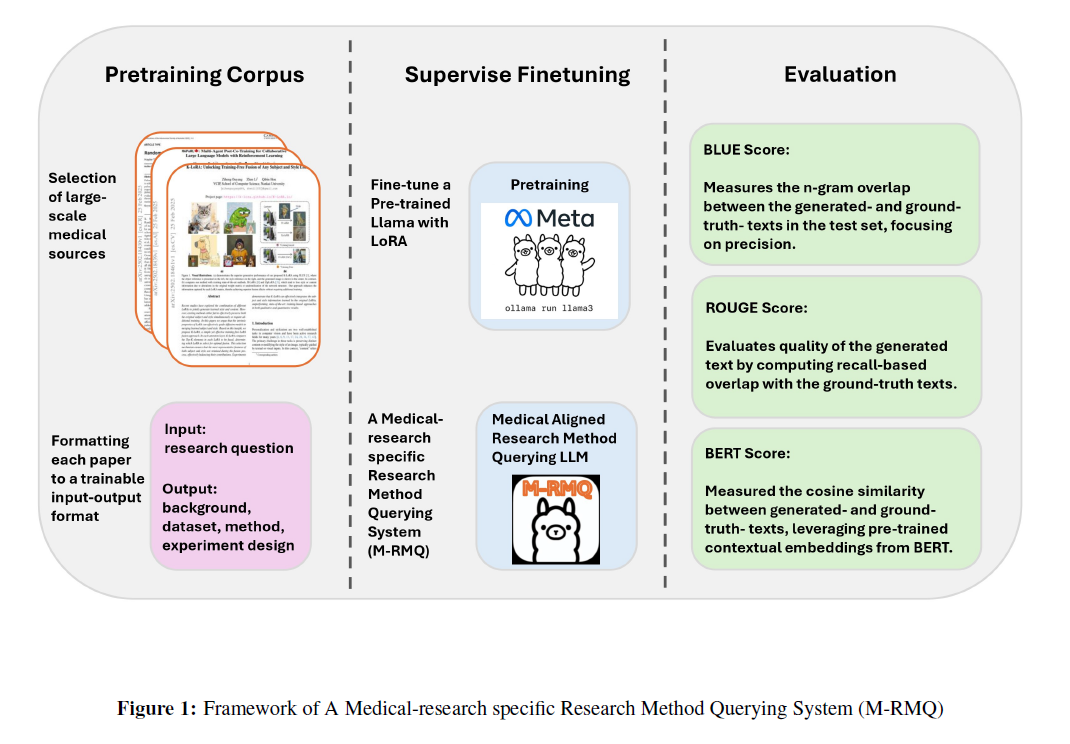

This project explores a medical AI system based on Llama-3.2-1B-it, trained with LoRA, that assists researchers in planning methods, data subjects, and experiments. We use the expert-labeled subset of PubMedQA, which includes 1,000 high-quality samples containing research questions and corresponding long answers with methodological insights.
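As a rough illustration of why LoRA keeps training cheap, the sketch below shows the low-rank update it adds to a frozen weight matrix and compares trainable-parameter counts. The function names, the toy matrices, and the 2048 x 2048 layer size with rank 8 are illustrative assumptions, not the configuration used in this project.

```python
def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def lora_forward(x, W, A, B, alpha, rank):
    """Compute y = W x + (alpha / rank) * B (A x).

    W is the frozen base weight (d_out x d_in); only A (rank x d_in)
    and B (d_out x rank) would be trained.
    """
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


def lora_param_counts(d_out, d_in, rank):
    """Return (full, lora) trainable-parameter counts for one weight matrix."""
    full = d_out * d_in
    lora = rank * (d_in + d_out)
    return full, lora


# Hypothetical sizes, chosen only to show the order-of-magnitude gap.
full, lora = lora_param_counts(2048, 2048, 8)
print(f"full fine-tuning: {full} params, LoRA: {lora} params")
```

With B initialized to zeros, the adapted layer starts out identical to the base layer, so training begins from the pretrained model's behavior.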

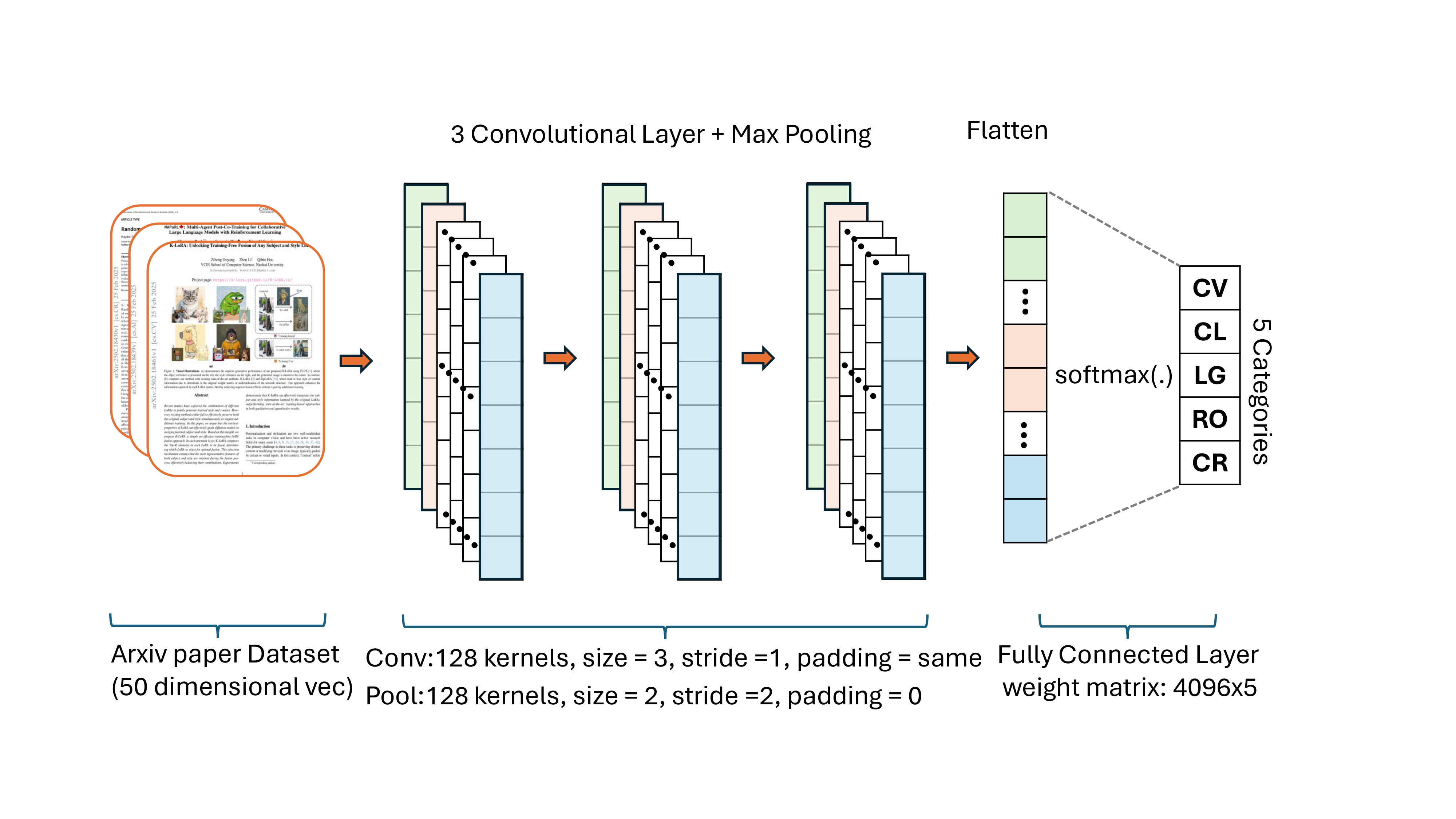

ArXiv Paper Classification using CNN and GloVe

We address the challenge of accurately classifying research papers by using a CNN to efficiently extract hierarchical and local features from textual data. GloVe embeddings enhance the semantic representation of the input, transforming words into dense vectors enriched with pre-trained semantic knowledge.
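The local feature extraction described above can be sketched as a single convolutional filter sliding over a sequence of word vectors, followed by ReLU and max-over-time pooling. This is a minimal pure-Python sketch: the two-dimensional toy vectors stand in for real GloVe embeddings, and the filter weights are hand-picked assumptions rather than learned values.

```python
def conv1d_max_pool(embeddings, kernel, bias=0.0):
    """Apply one text-CNN filter and max-pool its activations over time.

    embeddings: list of token vectors (one per word).
    kernel: list of weight vectors, one per position in the window.
    """
    width = len(kernel)
    if len(embeddings) < width:
        raise ValueError("sequence is shorter than the filter width")

    activations = []
    for start in range(len(embeddings) - width + 1):
        window = embeddings[start:start + width]
        # Dot product between the filter and the flattened window.
        score = bias + sum(
            w * e
            for k_row, e_row in zip(kernel, window)
            for w, e in zip(k_row, e_row)
        )
        activations.append(max(0.0, score))  # ReLU

    # Max-over-time pooling keeps the strongest match anywhere in the text.
    return max(activations)


# Toy stand-ins for GloVe vectors of a three-word sentence.
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
bigram_filter = [[1.0, 0.0], [0.0, 1.0]]
feature = conv1d_max_pool(sentence, bigram_filter)
```

A full classifier would stack many such filters of different widths and feed the pooled features to a dense layer.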

Machine-Generated Text Attribution

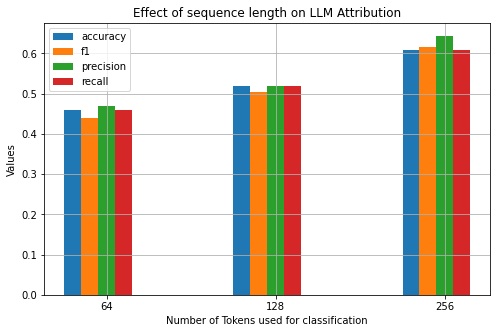

Trained a BERT sequence classifier on a novel dataset created with 5 generative models (GPT-2-small, GPT-2XL, Phi-2, Falcon-7B, Mistral-7B-it) by prompting them with text sourced from Wikipedia and the GSM8K math dataset, achieving an F1-score of 0.65. Also studied the impact of input length, prompt domain, and LLM parameter size on attribution accuracy.
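For readers unfamiliar with how an F1-score is computed when there are several source models to choose from, here is a minimal sketch of macro-averaged F1. The text above does not say which averaging was used, so macro averaging is an assumption here, and the labels in the example are made up for illustration.

```python
def macro_f1(y_true, y_pred):
    """Average the per-class F1 scores, weighting every class equally."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); treat an empty class as 0.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Hypothetical predictions for four generated texts.
true_sources = ["gpt2", "gpt2", "phi2", "phi2"]
predicted = ["gpt2", "phi2", "phi2", "phi2"]
score = macro_f1(true_sources, predicted)
```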